Why Ragnerock?

The intelligence layer for your data stack

Unlock the power of data with agentic AI: Unify data mining, ingestion, parsing, analysis, and automation in one ecosystem. Built on top of your existing data infrastructure.

Data-driven AI

The economics of insight have changed.

It used to be expensive to extract structured information from unstructured data. Data was bought from vendors or, for high-value targets, extracted with bespoke in-house pipelines. That investment was a moat, and only the best-resourced firms could afford to play.

"The work that used to take a team of MLEs six months can be done in an afternoon."

That constraint is gone. Frontier AI models can now do topic classification, sentiment analysis, entity extraction, document parsing, and information extraction out of the box.

- Earnings calls. Extract management sentiment, guidance changes, and topic-level analysis from thousands of transcripts automatically.

- Regulatory filings. Parse 10-Ks, 8-Ks, and proxy statements to extract risk factors, executive compensation, and material changes.

- Broker research. Structure sell-side reports into price targets, rating changes, and thesis summaries that flow directly into your research stack.

This changes the game. There's a vast reservoir of value sitting in data sources that were previously too expensive to tap systematically. That data is now accessible. Imagine what you could do with the power to mine it cost-effectively at scale.

The new constraint isn't capability. It's infrastructure.

The models exist. Ragnerock gives you the platform to deploy them systematically, with the orchestration, provenance, and data integration that production research requires.

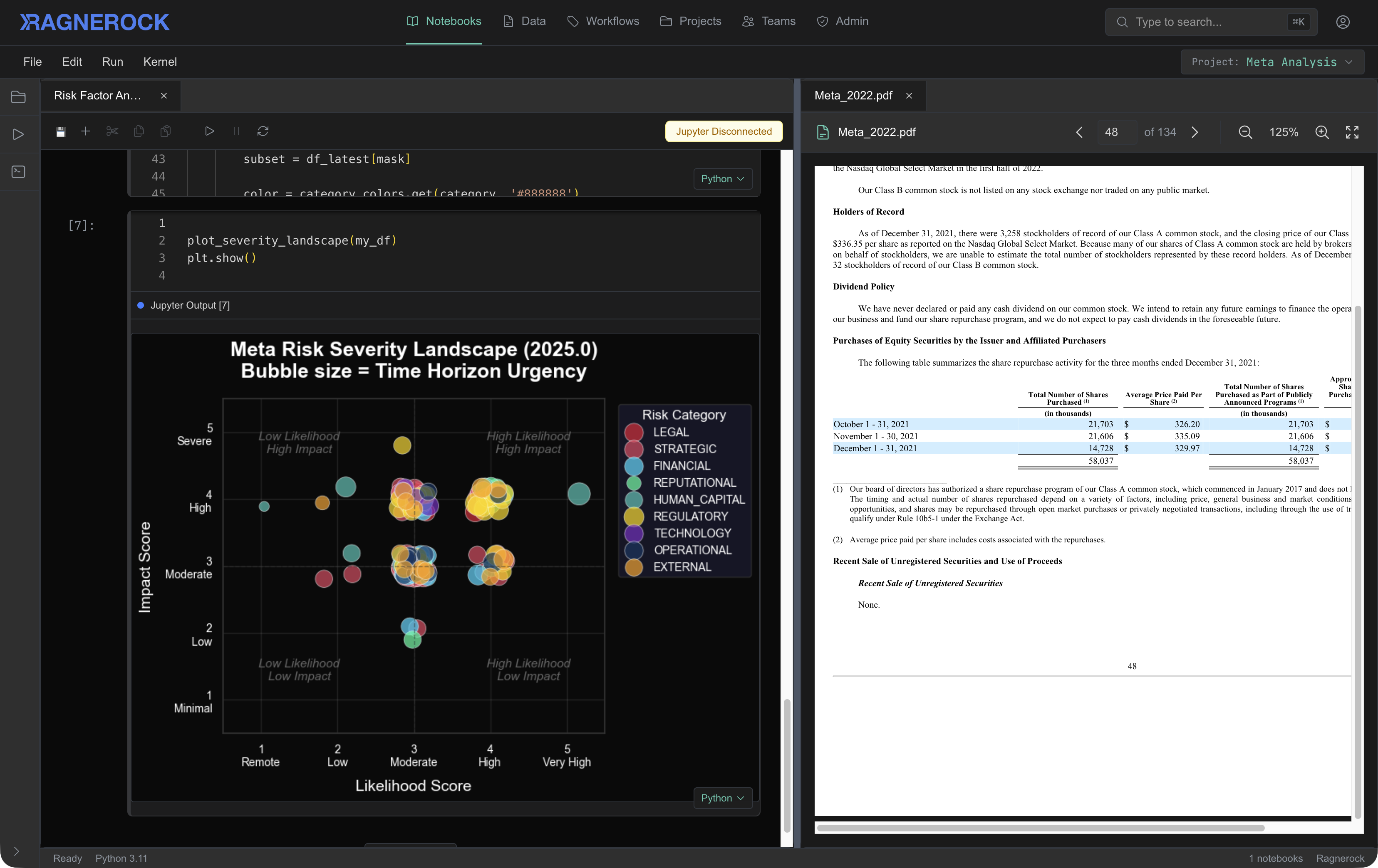

AI-native data analysis

Revolutionize your research the same way you've revolutionized your engineering.

Software engineering is in the middle of an AI-native revolution. Tools like Claude Code and Cursor have changed what a single engineer can accomplish, because they're built around the artifact engineers produce: code.

Quantitative research and data science are still waiting for their equivalent. And it won't come from the same tools, because even though quants might write Python all day, the artifact they produce isn't code. It's data. Structured datasets, derived features, annotated signals: code is the means; data is the end.

A coding agent can write you a script to plot a time series. It can't tell you what data you have available to plot, or how that data was derived, or whether the upstream extraction is still current. That's the gap. The tools that transformed software engineering are code-first. Quant research needs data-first tooling — built around the data context, not the notebook.

Meanwhile, forward-looking teams are building LLM-powered data processing pipelines. Scale those pipelines and unleash the power of your research teams with a unified research platform: a data-first, agentic workbench that empowers your quants to move faster and discover more.

Intelligence from your data

One platform. Every pipeline. Full auditability.

Ragnerock is an AI-native platform for building your firm's data ingestion, parsing, and analysis ecosystem. It sits on top of your existing data lake as the layer where your unstructured data processing lives — and bridges those outputs with the structured data you already have.

Traditional approaches force a choice: buy pre-packaged data products that don't quite fit your research questions, or build and run custom in-house data ingestion pipelines. Ragnerock eliminates that tradeoff.

Define your analytical logic once. The platform handles document parsing, chunking, orchestration, and persistence. Your team focuses on the research question, not the infrastructure. If a hypothesis doesn't pan out, you've spent minutes configuring a workflow — not months building a pipeline.

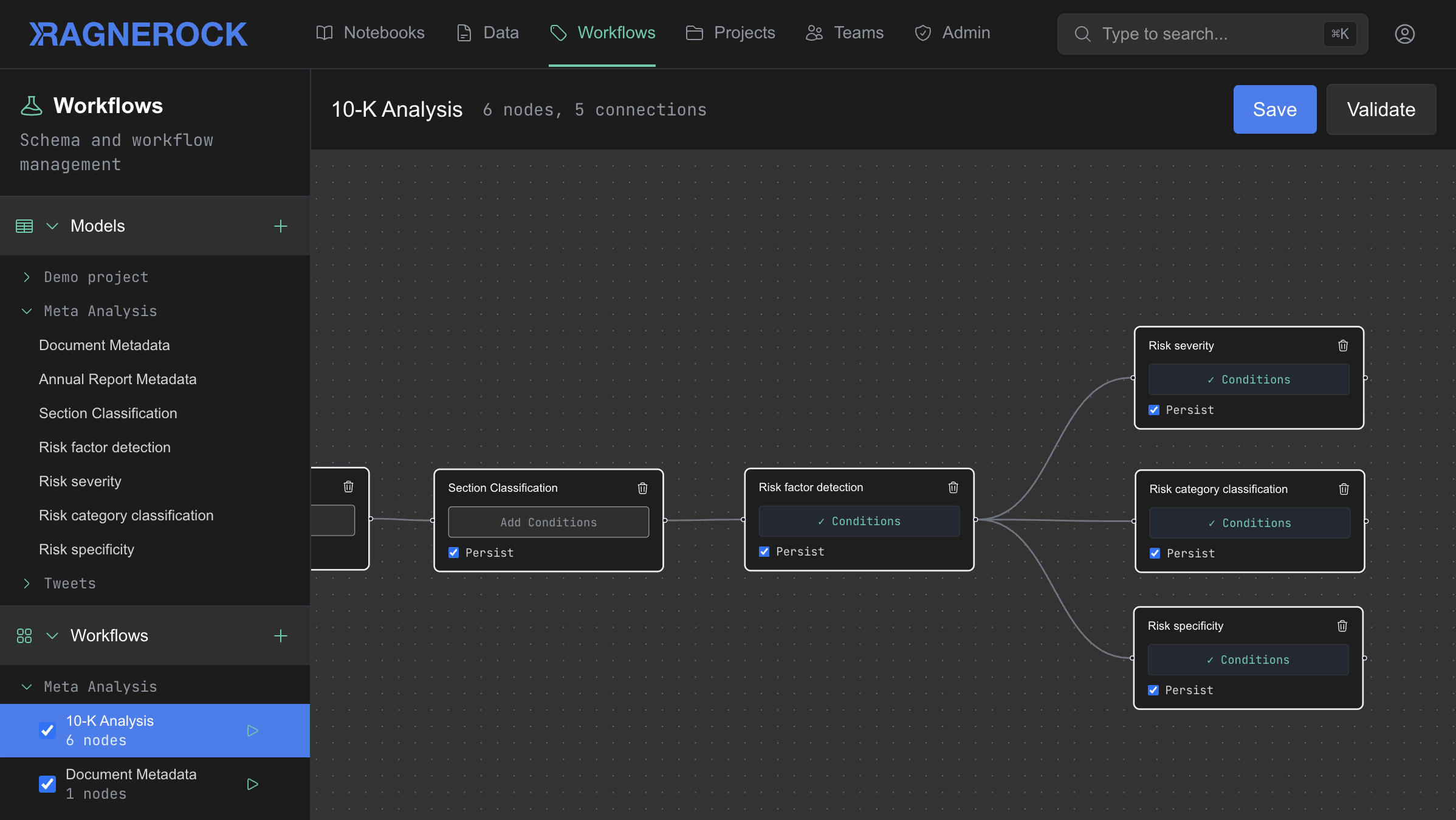

- Define the analytical flow using composable AI agents. Each operator is a node in a DAG with a defined data contract: what it receives, what it produces, validated against JSON Schema. Chain them into multi-step pipelines that go far beyond simple extraction.

- Orchestrate workflows that run automatically when new data arrives, or trigger them manually. Process thousands of documents through the same analytical pipeline without writing orchestration code.

- Persist results as structured, queryable annotations in your data lake. Every output links back to the specific source document, page, and passage it came from. Which operator produced it, which model, which prompt: the full chain of custody is recorded automatically.

- Query with standard SQL, semantic search, or a conversational research agent. Millisecond response times. Deterministic results. No LLM running at query time. Join AI-derived intelligence with the rest of your data using tools your team already knows.
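To make the define, persist, and query steps concrete, here is a minimal, self-contained sketch of the pattern described above. It is not Ragnerock's API: `Operator`, `check_contract`, `run_pipeline`, the two stub operators, and the sample documents are all hypothetical. Keyword rules stand in for model calls, a simple type check stands in for full JSON Schema validation, a linear chain stands in for a general DAG, and an in-memory SQLite database stands in for the data lake.

```python
import json
import sqlite3
from dataclasses import dataclass
from typing import Any, Callable


# Hypothetical operator: a pipeline node with a declared input/output contract.
@dataclass
class Operator:
    name: str
    inputs: dict[str, type]   # fields the operator requires, with expected types
    outputs: dict[str, type]  # fields the operator promises to produce
    fn: Callable[[dict[str, Any]], dict[str, Any]]
    model: str = "stub-model"  # hypothetical model name, recorded for provenance
    prompt: str = ""


def check_contract(record: dict, contract: dict, where: str) -> None:
    # Minimal stand-in for JSON Schema validation: presence plus type check.
    for key, typ in contract.items():
        if not isinstance(record.get(key), typ):
            raise TypeError(f"{where}: field {key!r} missing or not {typ.__name__}")


def run_pipeline(ops: list, doc: dict):
    """Run operators in order (a linear chain, the simplest DAG), logging each step."""
    record, steps = dict(doc), []
    for op in ops:
        check_contract(record, op.inputs, f"{op.name} input")
        out = op.fn(record)
        check_contract(out, op.outputs, f"{op.name} output")
        record.update(out)
        steps.append({"operator": op.name, "model": op.model, "prompt": op.prompt})
    return record, steps


# Stub operators: keyword rules stand in for LLM calls.
def find_guidance(rec):
    for page, text in enumerate(rec["pages"], start=1):
        if "guidance" in text.lower():
            return {"page": page, "passage": text}
    return {"page": 0, "passage": ""}


def score_sentiment(rec):
    text = rec["passage"].lower()
    score = 1.0 if "raise" in text else -1.0 if "lower" in text else 0.0
    return {"sentiment": score}


extract = Operator("find_guidance", {"pages": list}, {"page": int, "passage": str}, find_guidance)
sentiment = Operator("score_sentiment", {"passage": str}, {"sentiment": float},
                     score_sentiment, prompt="Rate guidance tone from -1 to 1.")

# Persist annotations with a link back to page and passage, plus provenance.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE annotations (doc_id TEXT, ticker TEXT, page INT, "
           "passage TEXT, sentiment REAL, provenance TEXT)")
db.execute("CREATE TABLE prices (ticker TEXT, close REAL)")

docs = [
    {"doc_id": "call-001", "ticker": "AAA", "pages": ["Intro remarks.", "We raise full-year guidance."]},
    {"doc_id": "call-002", "ticker": "BBB", "pages": ["We lower guidance for Q3."]},
]
for doc in docs:
    rec, steps = run_pipeline([extract, sentiment], doc)
    db.execute("INSERT INTO annotations VALUES (?, ?, ?, ?, ?, ?)",
               (rec["doc_id"], rec["ticker"], rec["page"], rec["passage"],
                rec["sentiment"], json.dumps(steps)))
db.executemany("INSERT INTO prices VALUES (?, ?)", [("AAA", 101.5), ("BBB", 48.2)])

# Query: plain SQL joins the AI-derived annotations with existing structured data.
rows = db.execute("""
    SELECT a.ticker, a.sentiment, a.page, p.close
    FROM annotations a JOIN prices p ON a.ticker = p.ticker
    ORDER BY a.ticker
""").fetchall()
for row in rows:
    print(row)
```

The design point the sketch tries to capture is that contracts are checked at every node boundary, so a malformed output fails loudly at the step that produced it, and that queries run over stored annotations, so no model is invoked when you read the results.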

From prototype to production, no handoff

The workflow you build to explore a hypothesis is the same workflow that runs in production. No reimplementation, no translation between research code and production systems. When your research team validates a signal, it's already running at scale.

Explore new frontiers

Capabilities that change how your team works with data.

Research velocity

Explore more hypotheses, faster.

Investigating a new data source used to mean weeks or months of pipeline engineering before you could even evaluate the signal. With Ragnerock, that collapses to days of workflow configuration. The research ideas that weren't worth the effort at $50K of engineering time become worth investigating at $2K.

Your logic, your schema

Intelligence built on your questions, not a vendor's.

When you buy structured data from a vendor, you inherit their schema, their methodology, and their update cadence. Ragnerock lets your team define the extraction logic from scratch: what gets pulled, how it's structured, when it refreshes.

Full provenance

Auditable by design.

Every annotation traces back to source. Every workflow step is logged. Every model call is recorded. When your risk team, your compliance team, or a regulator asks how a data point was derived, the full chain of custody is already captured.

Augment your data lake

Additive to your stack. Not a replacement for any of it.

Ragnerock outputs flow directly into the data infrastructure you've already provisioned and audited. Source documents stay in your buckets, under your security policies. Bring your own API keys for the AI providers you already trust.

The notebook interface integrates with Jupyter. Your team's existing Python workflows keep working. Ragnerock adds the data layer underneath — so your quants can go from exploring a hypothesis conversationally to pulling structured results into a dataframe without switching tools.