The Platform

From raw data to queryable intelligence. One platform.

Ragnerock gives your team four building blocks: AI agents that extract structured data from any source, workflows that orchestrate them, a SQL query layer over the results, and a notebook environment that bridges AI-powered research with your existing Python workflows.

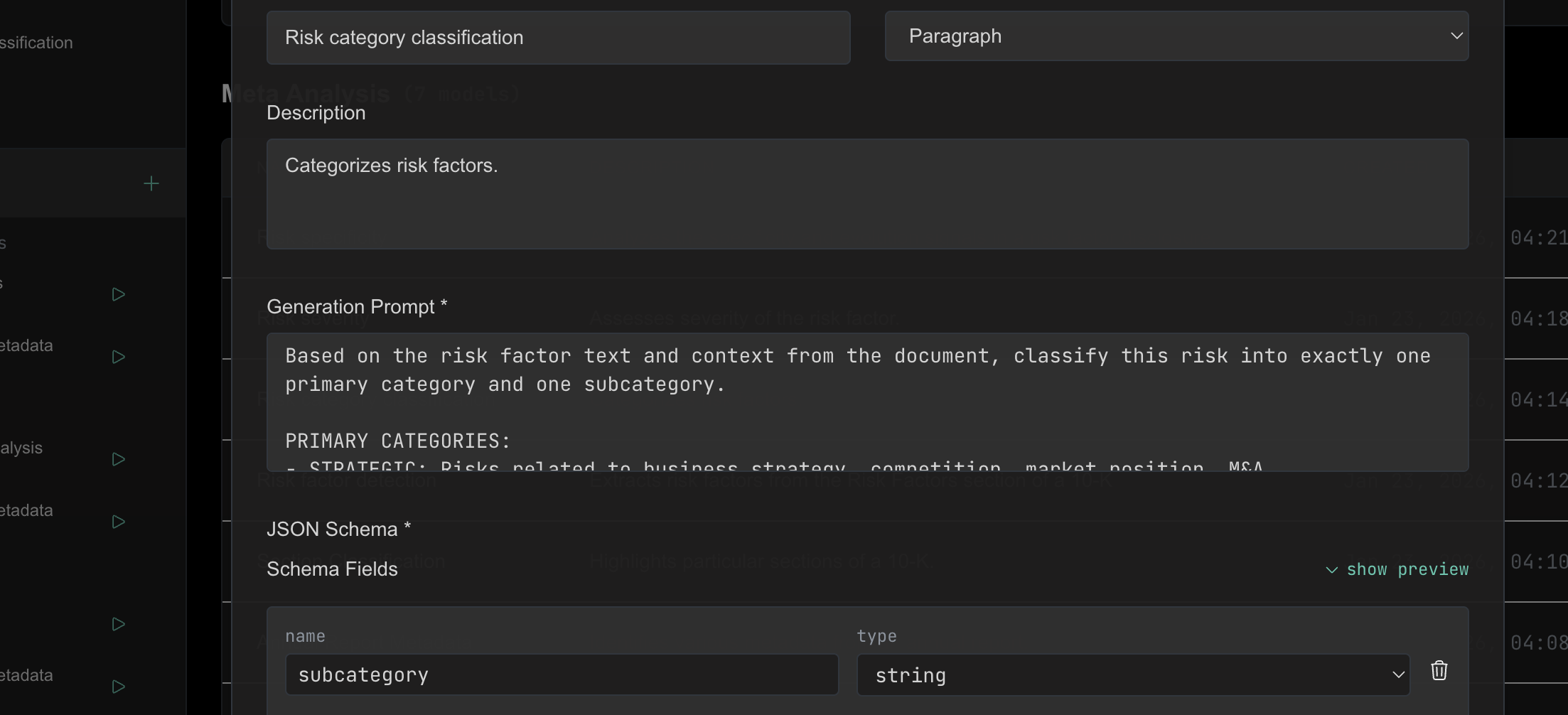

AI Agents with Data Contracts

Operators

Each operator is an AI agent with a defined data contract: what it receives, what it produces, validated against JSON Schema. Extract sentiment, classify risk factors, parse financial statements, or build something entirely novel for your research question. Operators can call external tools, apply business logic, or run custom code. You define the analytical task; Ragnerock enforces the contract.
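As a rough illustration of what enforcing a data contract can look like, the sketch below checks an operator's output against a schema written in JSON Schema style. Everything here is an assumption made for the example: the schema, the field names, and the `contract_violations` helper are hypothetical, and the checker is a deliberately simplified stand-in that covers only required keys and basic types, not the full JSON Schema specification or Ragnerock's actual validation.

```python
from typing import Any

# Hypothetical output contract for a sentiment operator (illustrative fields).
SENTIMENT_OUTPUT_SCHEMA = {
    "type": "object",
    "required": ["sentiment_score", "key_topics"],
    "properties": {
        "sentiment_score": {"type": "number"},
        "key_topics": {"type": "array"},
    },
}

# Maps the JSON Schema type names used above to Python types.
_JSON_TYPES = {
    "object": dict,
    "array": list,
    "string": str,
    "number": (int, float),
    "boolean": bool,
}


def contract_violations(payload: Any, schema: dict) -> list:
    """Return a list of violations; an empty list means the payload conforms.

    Simplified stand-in: checks the top-level type, required keys, and
    property types only.
    """
    if not isinstance(payload, _JSON_TYPES[schema["type"]]):
        return [f"expected {schema['type']}"]
    errors = []
    for key in schema.get("required", []):
        if key not in payload:
            errors.append(f"missing required field: {key}")
    for key, rule in schema.get("properties", {}).items():
        if key not in payload:
            continue
        value = payload[key]
        type_ok = isinstance(value, _JSON_TYPES[rule["type"]])
        # bool is a subclass of int in Python, so reject it for "number".
        if rule["type"] == "number" and isinstance(value, bool):
            type_ok = False
        if not type_ok:
            errors.append(f"{key}: expected {rule['type']}")
    return errors


good = {"sentiment_score": 0.62, "key_topics": ["margins", "guidance"]}
bad = {"sentiment_score": "positive"}

print(contract_violations(good, SENTIMENT_OUTPUT_SCHEMA))
print(contract_violations(bad, SENTIMENT_OUTPUT_SCHEMA))
```

A production setup would more likely rely on a complete JSON Schema validator; the point of the sketch is only that a non-conforming output is caught before it reaches downstream queries.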

Learn more

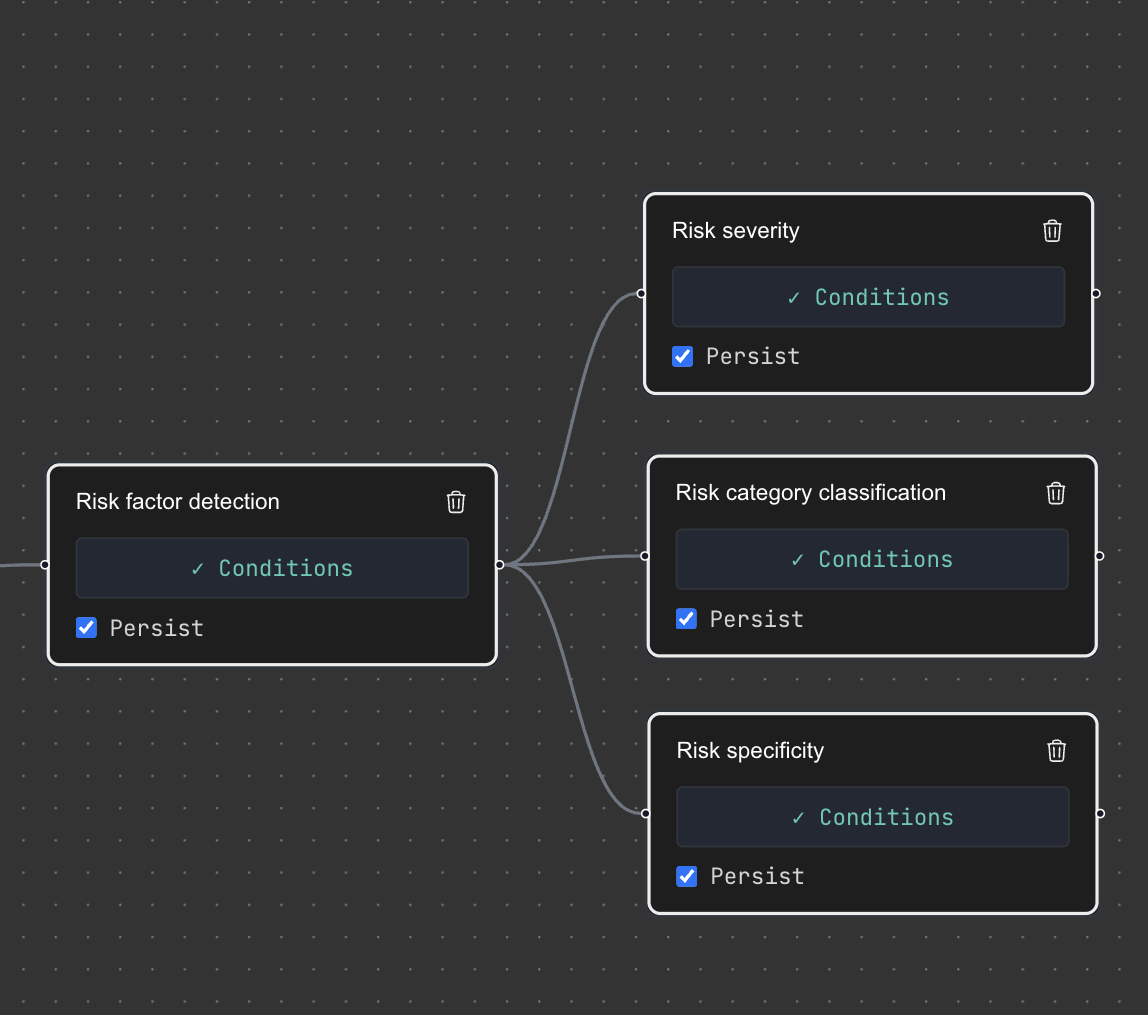

Composable Pipelines

Workflows

Workflows are DAGs of operators that define your full analytical flow. Go beyond single-step extraction: identify relevant sections of a document, segment them, run specialized analysis on each segment, call market data tools, benchmark results. Workflows trigger automatically when new data arrives or run on demand. The entire pipeline is versioned and auditable.
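To make the DAG idea concrete, here is a minimal sketch that runs a small workflow in dependency order using Python's standard-library `graphlib`. The operator names, their placeholder logic, and the `run_workflow` helper are all hypothetical and stand in for real model or tool calls; this is not Ragnerock's workflow API.

```python
from graphlib import TopologicalSorter


# Hypothetical operators. Each receives a dict of its upstream results.
def find_sections(inputs):
    return ["risk factors", "outlook"]


def segment(inputs):
    return [section.upper() for section in inputs["find_sections"]]


def analyze(inputs):
    # Placeholder "analysis": score each segment by its length.
    return {seg: len(seg) for seg in inputs["segment"]}


def market_data(inputs):
    # Placeholder for an external market data tool call.
    return {"baseline": 10}


def benchmark(inputs):
    baseline = inputs["market_data"]["baseline"]
    return {seg: score - baseline for seg, score in inputs["analyze"].items()}


OPERATORS = {
    "find_sections": find_sections,
    "segment": segment,
    "analyze": analyze,
    "market_data": market_data,
    "benchmark": benchmark,
}

# Each step maps to the set of steps it depends on.
DEPENDENCIES = {
    "find_sections": set(),
    "segment": {"find_sections"},
    "analyze": {"segment"},
    "market_data": set(),
    "benchmark": {"analyze", "market_data"},
}


def run_workflow(operators, dependencies):
    """Run every operator after all of its dependencies have finished."""
    results = {}
    order = list(TopologicalSorter(dependencies).static_order())
    for name in order:
        upstream = {dep: results[dep] for dep in dependencies[name]}
        results[name] = operators[name](upstream)
    return order, results


order, results = run_workflow(OPERATORS, DEPENDENCIES)
print(order)
print(results["benchmark"])
```

Because `market_data` has no dependencies, it may run at any point before `benchmark`; the topological sort only guarantees that each step sees the outputs it needs.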

Learn more

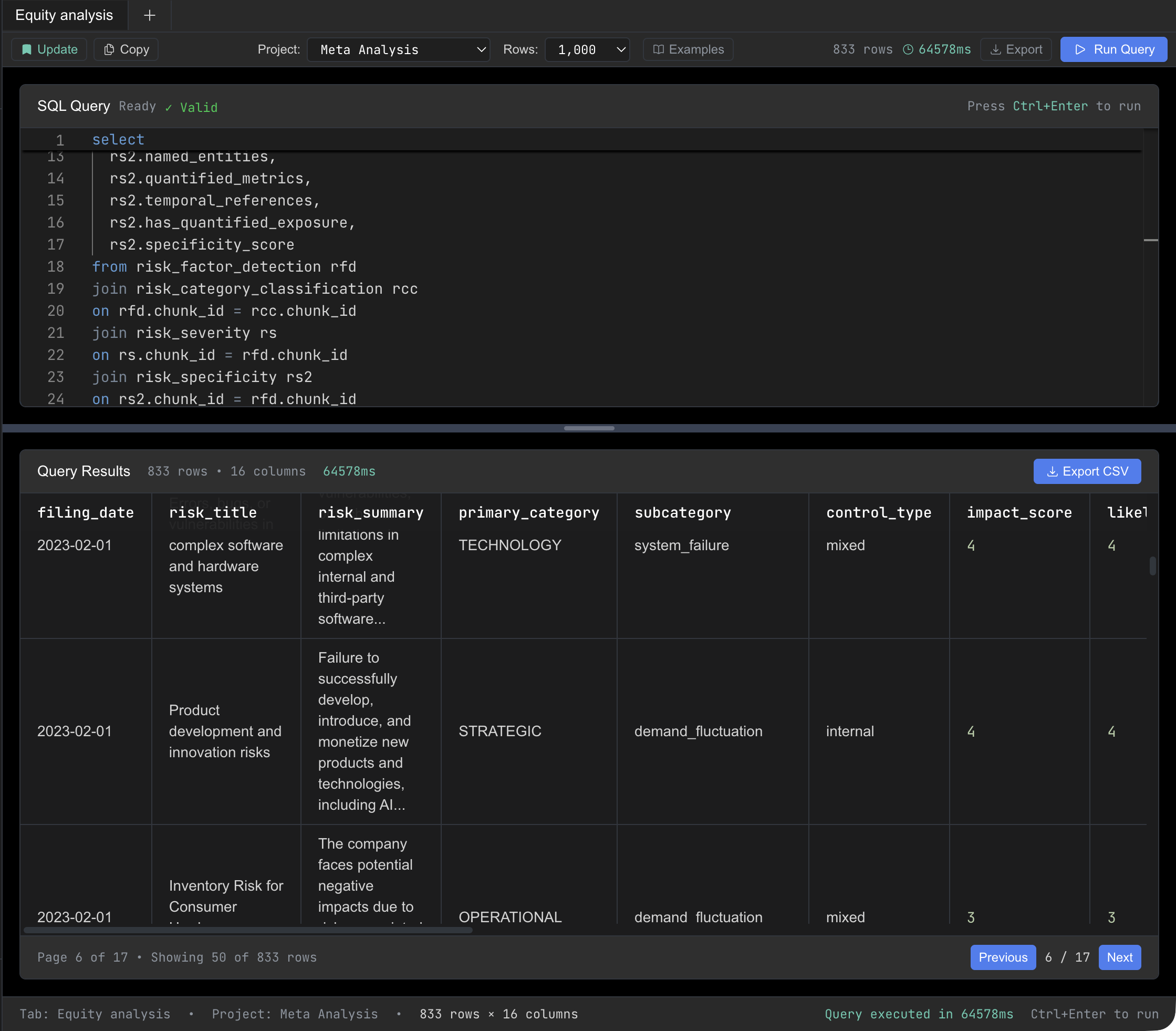

SQL Over AI Outputs

Queries

Every operator's results are exposed as SQL tables. Query, filter, join, and aggregate your AI-derived data with standard SQL, or search semantically when you need flexibility. Millisecond response times on pre-computed annotations. No LLM running at query time. Join AI-derived intelligence with the rest of your data lake using the syntax your team already knows.
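The sketch below shows the kind of join this enables, using an in-memory SQLite database as a stand-in for the query layer. The `positions` table, the tickers, and all values are made up for illustration, and nothing here should be read as Ragnerock's actual SQL dialect or schema.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- Stand-in for a table of pre-computed operator outputs.
    CREATE TABLE earnings_calls_annotations (
        call_id INTEGER PRIMARY KEY,
        ticker TEXT,
        sentiment_score REAL
    );
    -- Hypothetical table that already lives in your data lake.
    CREATE TABLE positions (
        ticker TEXT PRIMARY KEY,
        weight REAL
    );
    INSERT INTO earnings_calls_annotations VALUES
        (1, 'AAA', 0.75), (2, 'AAA', 0.25), (3, 'BBB', -0.25);
    INSERT INTO positions VALUES ('AAA', 0.6), ('BBB', 0.4);
    """
)

# Join AI-derived annotations with existing data using ordinary SQL.
rows = conn.execute(
    """
    SELECT p.ticker, p.weight, AVG(a.sentiment_score) AS avg_sentiment
    FROM positions AS p
    JOIN earnings_calls_annotations AS a ON a.ticker = p.ticker
    GROUP BY p.ticker, p.weight
    ORDER BY p.ticker
    """
).fetchall()

for ticker, weight, avg_sentiment in rows:
    print(ticker, weight, avg_sentiment)
```

No model is involved in the query itself; it reads stored values like any other table.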

Learn more

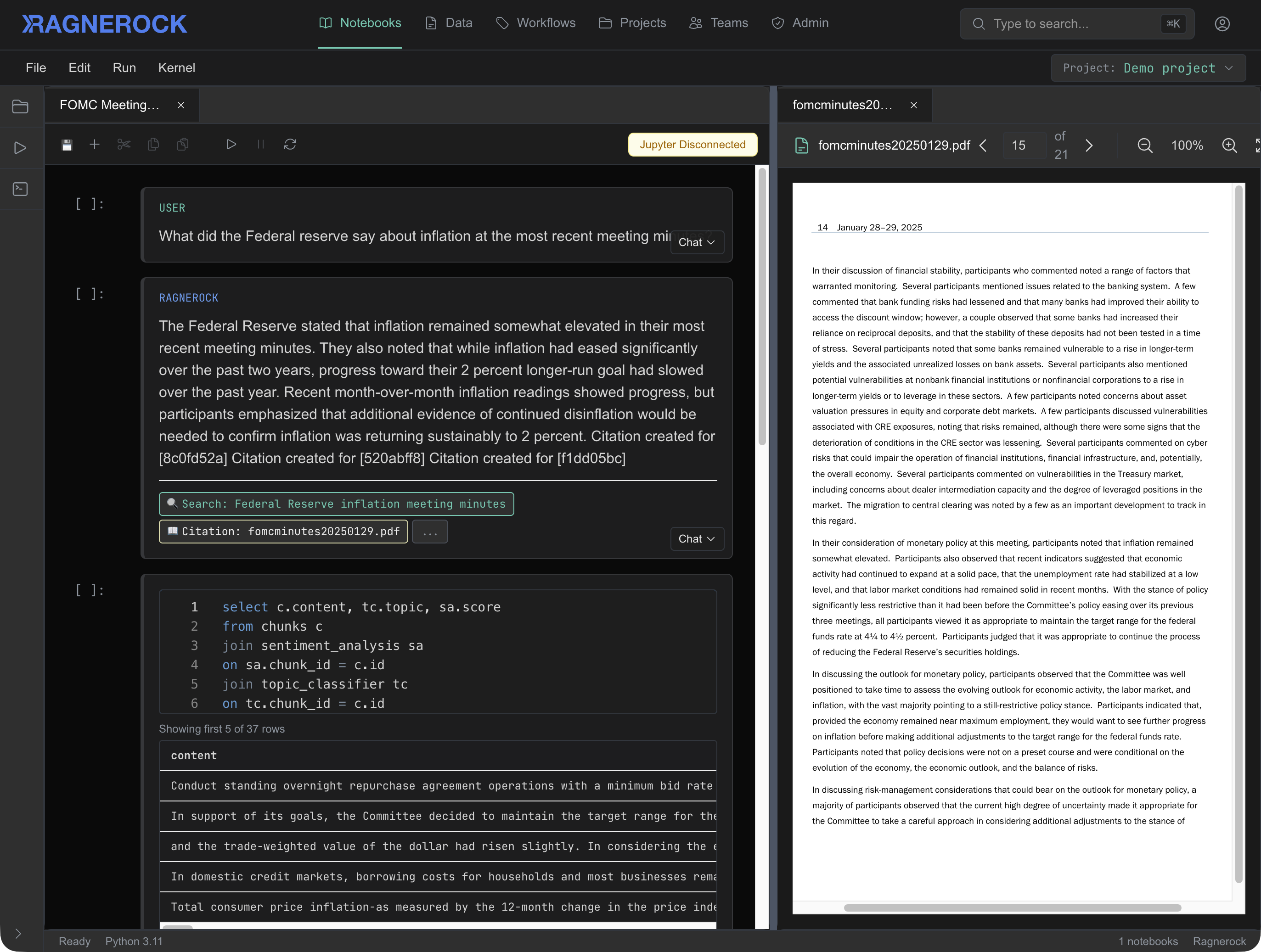

Where Research Happens

Notebooks

Notebooks bridge Ragnerock with your existing research environment. Run SQL queries and pull results directly into Python dataframes. An AI research agent can search across your entire document universe, answer questions with citations, and help you explore data conversationally before you commit to code. Integrates with Jupyter, so your team keeps working in the tools they already use.

Learn more

Architecture

Process once. Query infinitely.

Most AI data tools run inference on every query. Something like SELECT ai_analyze(text) FROM documents calls the model for each row, on each query, every time. This is slow (seconds per call), expensive (you're paying for LLM inference on every request), and non-deterministic (the same query can return different results on different days).

Ragnerock separates the extraction step from the query step. AI processing runs once, when data enters the system through a workflow. The results persist as structured, schema-validated annotations. Every query downstream hits pre-computed data at millisecond latency using standard SQL.

The economics follow directly. For data you query regularly — which is most research data — costs scale with data volume and extraction complexity, not with how many times your team queries it. For active research teams, this typically means a 5-10x reduction in AI-related costs compared to per-query inference.
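A toy sketch of the process-once pattern makes the scaling argument visible. It assumes a placeholder extractor (`fake_extract_sentiment`, hypothetical) in place of a real model call and uses in-memory SQLite as a stand-in store; the document texts and the count of 100 queries are arbitrary illustration values, not measurements.

```python
import sqlite3

inference_calls = 0


def fake_extract_sentiment(text: str) -> float:
    """Placeholder for a model call; counts how often it is invoked."""
    global inference_calls
    inference_calls += 1
    return 1.0 if "strong" in text else -1.0


documents = {1: "strong quarter", 2: "weak demand", 3: "strong guidance"}
num_queries = 100

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE annotations (doc_id INTEGER PRIMARY KEY, sentiment_score REAL)"
)

# Extraction step: one inference call per document, at ingest time.
for doc_id, text in documents.items():
    conn.execute(
        "INSERT INTO annotations VALUES (?, ?)",
        (doc_id, fake_extract_sentiment(text)),
    )

# Query step: repeated reads hit stored rows and never call the extractor.
for _ in range(num_queries):
    positive = conn.execute(
        "SELECT COUNT(*) FROM annotations WHERE sentiment_score > 0"
    ).fetchone()[0]

# Calls a per-query inference design would have made for the same workload.
per_query_calls = len(documents) * num_queries

print(inference_calls, per_query_calls, positive)
```

In this toy setup the extractor runs once per document no matter how many queries follow, whereas the per-query count grows with every additional query. Real savings depend on query frequency and extraction cost.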

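-- Per-query inference: the model runs on every row, every time
SELECT ai_analyze(transcript, 'extract sentiment')
FROM earnings_calls
WHERE date > '2025-01-01';

-- Ragnerock: reads pre-computed annotations, no model call at query time
SELECT sentiment_score, key_topics, risk_flags
FROM earnings_calls_annotations
WHERE date > '2025-01-01';

Bring Your Own Infrastructure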

Your data stays in your stack

Ragnerock connects to the AI providers, databases, and cloud storage your firm already uses. Annotation outputs flow directly into your data lake. No vendor lock-in, no data migration, full control.

- AI Providers. Connect your existing API keys for OpenAI, Anthropic, Google, or xAI. Use the models you trust without switching providers.

- Databases. Export annotation data directly to PostgreSQL, Snowflake, Databricks, or BigQuery. Your structured outputs live where your analytics already run.

- Cloud Storage. Store documents in AWS S3, Google Cloud Storage, or Azure Blob Storage. Ragnerock works with your existing buckets and security policies.

Ingest anything

All your data sources

Ragnerock handles virtually any data format your research requires, from documents and spreadsheets to web content and databases.

- Text & Markdown. Plain text files, Markdown documents, and rich text formats for seamless content ingestion.

- PDF Documents. Extract text, tables, and images from PDF files with intelligent layout preservation.

- Word Documents. Full support for .docx files including formatting, tables, and embedded content.

- Excel Spreadsheets. Import spreadsheets with full structure preservation across multiple sheets and formats.

- HTML & Web Scraping. Ingest web pages directly or set up automated scraping pipelines for continuous data collection.

- Image & Video. Process visual content with AI-powered extraction for charts, diagrams, and multimedia files.

- Dataframes & CSV. Native support for CSV, Parquet, and other tabular formats used in data science workflows.

- SQL Databases. Connect directly to PostgreSQL, MySQL, and other databases to query and ingest structured data.

See it in action.

Explore the platform yourself, or talk to us about how Ragnerock fits your firm's research workflow.